Interfaces of the Future

Early computers were people just like you and me. Only terribly good at math. Then came mechanical devices that were less error-prone, required no bathroom breaks, and did away with small talk entirely. So, of course, they eventually replaced the humans. No matter how skilled the human computers were, you’d interface with them the same way you would with your mother or a janitor: via natural language. But as computers were mechanized, they demanded newer interfaces. You couldn’t just talk to them. Interacting with them meant learning to use punch cards, teletypes, and command-line interfaces. Needless to say, they were difficult for laypeople to operate. It was clear that if the adoption of computers was to scale, their interfaces had to become more accessible. Building on Douglas Engelbart’s work at SRI in the 1960s, researchers at Xerox PARC, like Alan Kay, pioneered Graphical User Interfaces (GUIs) in the 1970s. Operating systems with GUIs were more intuitive. These graphical representations were revolutionary; what’s second nature to us now was a breakthrough back then. Instead of treating the computer as a foreign object whose ins and outs one must learn, the GUI presented it as your Desktop, containing your files, folders to organize those files into, and a trash bin for files you no longer need. These skeuomorphic idioms and abstractions are what ultimately made The Computer a household object.

Over time, user interfaces became more intuitive and streamlined. You’d rarely visit a website now and not know how to interact with it (unless the website is, of course, an Awwwards winner). And there’s a strong reason for that. We’ve collectively developed a new language for interfacing with machines, much as you would interface with a fellow human being. But most of us can only understand this language, not wrangle it. Despite open network protocols and open-source software, the landscape is dominated by a handful of massive online platforms and proprietary tools. Reading and writing programming languages are specializations, much like reading and writing natural language were before the printing press was invented. Those who could write books back then held places of power, in much the same way that those who can develop and refashion software do today.

This is by no means a novel insight. End-user programming has long been the holy grail of the software community: anyone being able to bend computers to their will. Alan Kay touched on this idea in 1984 when he wrote, “We now want to edit our tools as we have previously edited our documents.” Since then, the idea has manifested in low-code tools like spreadsheets, Zapier, Scratch, and iOS Shortcuts. Today, LLM-powered chat interfaces are the latest expression of this age-old vision of end-user programming. In this essay, I want to examine how we will interface with our tools in a future shaped by AI.

Tools

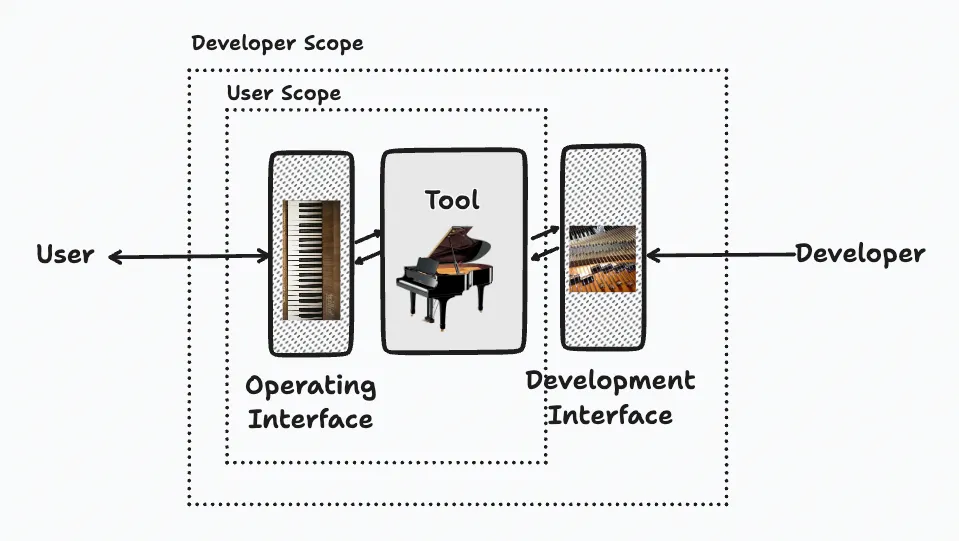

To lend some structure to our discussion, it's worth taking a closer look at the nature of the tools themselves. In general, a tool can be thought of as having two distinct interfaces: an operating interface and a development interface. Consider the piano in your living room, for example. You operate it by playing the keys and pressing the pedals. But a piano technician can develop it: tune and replace the strings, adjust the hammers and dampers, and refurbish the exterior. Say you woke up one odd day wanting to replace all your piano strings with plasma. You likely couldn’t do it, despite years of training on the instrument. This is because the interface you operate and the interface you develop with are usually abstracted away from each other. Thus, knowing how to operate a tool does not imply knowing how to develop it. Historically, the reason for this abstraction has been the gap between the development abilities of a user and those of a developer. But we’re at an inflection point in software where you can develop software with little to no programming skill. Enter On-Demand Interfaces: interfaces that users can create and modify according to their needs and preferences.

Development Interfaces

Say you’re throwing a Dungeons & Dragons party, and you must build an RSVP form with Sorting Hat functionality. Your current workflow might look something like this: you use Google Forms to collect the RSVP info, Google Sheets to store it, Apps Script to automatically generate a random character and house, and Zapier to send invitees an email with their house info. Instead of doing all that, you can now quickly bootstrap a Vercel app that achieves the same functionality, with much more customization, in under 2 minutes.
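To make the workflow concrete, here is a minimal sketch of the Sorting Hat logic in Python. It is an illustration under stated assumptions, not the app described above: the house and character lists, the `Invitation` type, and the function names are all hypothetical, and the email is only rendered as text rather than actually sent.

```python
import random
from dataclasses import dataclass

# Hypothetical option lists; the essay does not specify them.
HOUSES = ["Gryffindor", "Hufflepuff", "Ravenclaw", "Slytherin"]
CHARACTERS = ["Bard", "Cleric", "Rogue", "Wizard"]


@dataclass
class Invitation:
    name: str
    email: str
    house: str
    character: str


def sort_guest(name: str, email: str, rng: random.Random) -> Invitation:
    """Assign a random house and character to one RSVP."""
    return Invitation(name, email, rng.choice(HOUSES), rng.choice(CHARACTERS))


def invitation_email(inv: Invitation) -> str:
    """Render the email body; sending it is out of scope for this sketch."""
    return (
        f"To: {inv.email}\n"
        f"Hi {inv.name}, the Sorting Hat has spoken: you are a "
        f"{inv.character} of house {inv.house}. See you at the party!"
    )


if __name__ == "__main__":
    rng = random.Random()  # pass a seed here for reproducible sorting
    rsvps = [("Ada", "ada@example.com"), ("Grace", "grace@example.com")]
    for name, email in rsvps:
        print(invitation_email(sort_guest(name, email, rng)))
```

The form, the spreadsheet, the script, and the email step collapse into a few functions, which is the kind of small, single-purpose tool this section has in mind.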

Examples like this are basic, if not contrived. Still, they hint at a future where people can create tools instead of just composing them. In this future, customization and accessibility aren’t afterthoughts but are baked right into the product design. It’s important to note that despite recent advances in the state of the art, creating complex pieces of software still very much remains a human pursuit. LLMs will only enable us to exhaust all the low-hanging fruit much faster than we otherwise could. Every social media app or todo-list app that can be thought of will be made. What a time to be alive!

Operating Interfaces

How we operate these tools is changing as well. There’s active work on headless Interface Agents that can browse the web for you and perform tasks like booking the cheapest flight to Hawaii or finding directions to the nearest woman-owned bakery. Some notable examples are Nat Friedman’s Natbot, Adept’s ACT-1, Adobe’s Intelligent Agent Assists, and Lasso.AI. As you sit back and watch these models operate your computer, you can’t help thinking, “Why even bother watching?”

Reliability

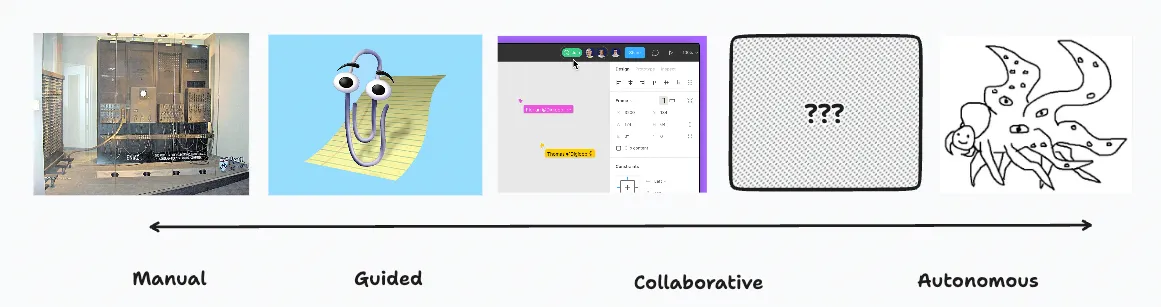

These are cool demos, and eventually, when the underlying models become as general and intelligent as humans, we can wrap our entire operating systems in cloud instances. But we’re not there yet. These interfaces are reaching for full autonomy prematurely. To understand why, let’s take a detour and talk about the evolution of cars. Early automobiles were entirely manual. They had hand-cranked engines, required drivers to shift gears manually, and were as clueless in the wild as a driver without a map. Eventually, as more and more people drove cars, the ecosystem improved. We got concrete roads, street signs, rumble strips, and yellow lines, all to guide the driver safely to their destination. Now, cars incorporate tech that enables collaboration between the driver and the vehicle: adaptive cruise control, parking sensors, lane-keeping assist, and GPS navigation. Far enough on the horizon, we will move to fully autonomous vehicles. There’s a trend here that is by no means limited to automobiles. Operating Interfaces evolve in a certain fashion: they go from manual to guided to collaborative and, finally, to autonomous. Users give up control not because the technology is new but because the new technology is reliable. LLM-powered apps are no different. Our interfaces should now move from being manual and semi-guided to sitting somewhere between fully guided and collaborative. This is in stark contrast to recent experimental releases like AutoGPT and BabyAGI, which aim straight for autonomy.
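The four stages can be sketched as modes that decide who executes a step. This is a toy illustration, not an implementation of any of the agents named above: the `Mode` enum, `run_step`, and the `approve` callback are hypothetical names, and the one-line definition of each stage is my reading of the progression.

```python
from enum import Enum
from typing import Callable


class Mode(Enum):
    MANUAL = 1         # the user performs every step unaided
    GUIDED = 2         # the tool suggests the step, the user performs it
    COLLABORATIVE = 3  # the tool performs the step once the user approves
    AUTONOMOUS = 4     # the tool performs the step without asking


def run_step(step: str, mode: Mode, approve: Callable[[str], bool]) -> str:
    """Describe who ends up executing a step under the given mode."""
    if mode is Mode.MANUAL:
        return f"user does: {step}"
    if mode is Mode.GUIDED:
        return f"tool suggests, user does: {step}"
    if mode is Mode.COLLABORATIVE:
        # Control stays with the user: a rejected step is handed back.
        if approve(step):
            return f"tool does (approved): {step}"
        return f"user takes over: {step}"
    return f"tool does: {step}"


if __name__ == "__main__":
    # A cautious user lets the tool search but not spend money.
    cautious = lambda step: "pay" not in step
    for step in ["search flights", "pay for ticket"]:
        print(run_step(step, Mode.COLLABORATIVE, cautious))
```

The only difference between the collaborative and autonomous modes is the approval check, and that check is what a user gives up once the tool has proven reliable.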

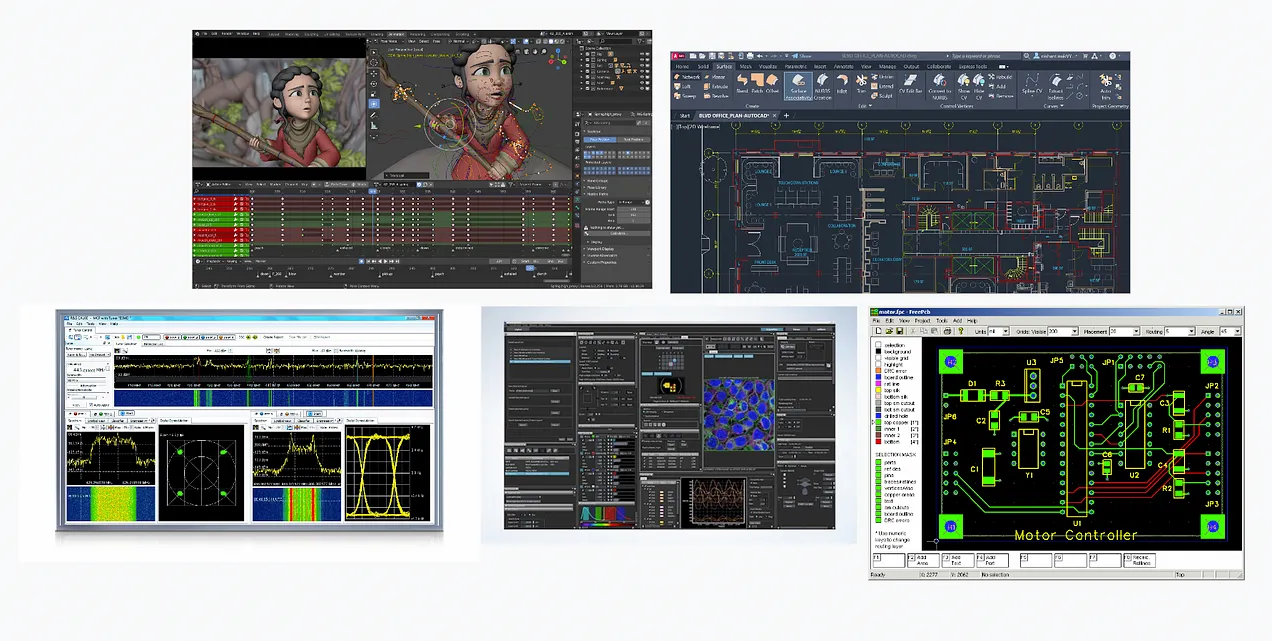

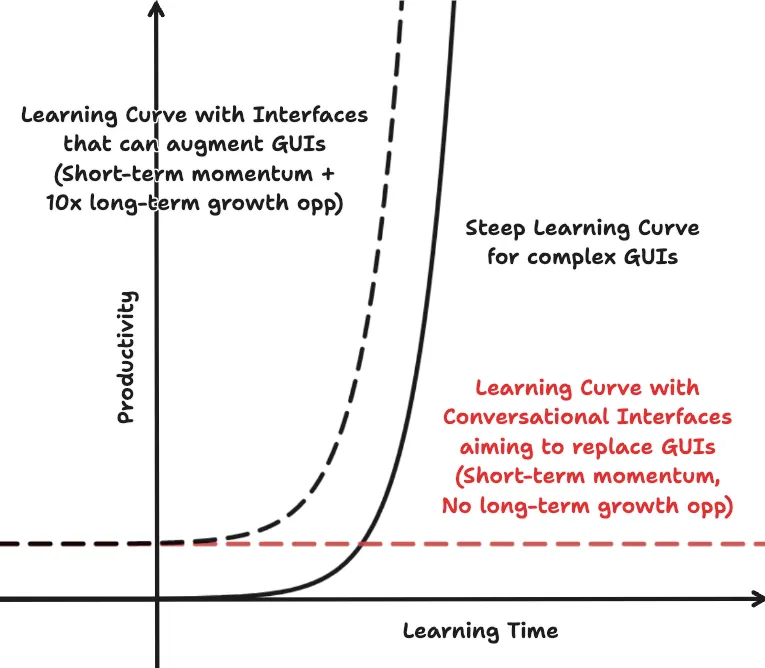

LLM-powered language user interfaces (LUIs) are promising but have their limitations. To understand them, we must first appreciate the advantages of GUIs. There are software tools that are easy to learn, like the Substack portal on which I’m writing this essay or the Twitter platform on which you most likely found it. Then there are software tools for PCB design, DNA sequencing, 3D architecture, computer graphics, and audio processing. They all have a steep learning curve. That is to say, making progress as a novice is extremely hard, but once the user hits a certain tipping point, productivity climbs sharply. It just clicks.

Minimum Viable Complexity

The key to this rapid increase in productivity is that users develop Muscle Memory with practice. They internalize the key workflows and visual syntax of a GUI and learn to work effectively, ultimately becoming one with the tool. This is where language interfaces fall short. Compared to the interfaces of Blender and AutoCAD, language interfaces are ✨ simple ✨, but at the expense of functionality, to the extent that they are not even viable. For instance, imagine trying to edit a complex video using a language interface. While it may be possible to perform basic actions like adding preset effects, more advanced tasks such as scrubbing, trimming video, color grading, and adjusting EQ would likely be cumbersome and inefficient compared to a GUI designed specifically for video editing. These interfaces aren’t complex because of bad design, waiting for an LLM wrapper to simplify them. They’re complex because what they’re designed to do is complex. Simplifying interfaces for the sake of simplicity risks reducing the tool's capability and the user's control. Consider the experience of a chatbot that stubbornly forces you to give up low-level control, yet occasionally requires you to take over the automated workflow when it fails. It’s comparable to a no-code tool asking users to debug its autogenerated code. This is why I believe language interfaces, at the moment, are most effective when augmenting existing low-level interfaces rather than replacing them.

Need For Thought

Could a painter articulate their painting in great detail before they paint, or an author the prose they’ll write before they ever put pen to paper? Drawing, or any other creative process for that matter, can be thought of as recursively taking detours until you reach a destination you’re happy with. Bret Victor, in his talk "The Humane Representation of Thought," observed that the ability to articulate an idea often lags behind one's understanding of it. Language interfaces inadvertently force users to actively think and articulate before taking action. They may only be suitable for tasks of a linear nature, with more room for routine than creativity. For more creative jobs, like manually creating digital art, manipulating low-level tools must be the way to go.

Interfaces are not designed to serve everyone. Tools like the iPhone Camera and Notes apps favor the beginner over the power user. They are easy to pick up but offer little room for growth. On the other hand, interfaces like those of AutoCAD and Blender admittedly favor the power user over the novice. They are hard to pick up but offer tremendous room for growth. Language interfaces, in their current design, seem to serve neither novices nor power users. To fully utilize a language interface, users must prompt it correctly to achieve the desired outcome. This presents a challenge for novices, who might not know the right keywords and workflows to articulate their intent as effectively as a power user would. A power user, on the other hand, would accomplish the goal much faster with their developed muscle memory than by planning and articulating each step along the way.

Closing Remarks

Interfaces evolve with technology. Many of the shortcomings I’ve described in this essay stem not from the interfaces themselves but from the limited generality of their underlying models. If these models continue to improve at the current rate, there will come a time when they will be able to just read our minds (maybe?), and we can safely treat them as black boxes. But until then, point-and-click GUIs are here to stay. We can maybe delegate the “pointing and clicking” to an AI assistant, but we can’t proverbially draw the curtain on it yet. At the current stage, interfaces must be designed to be guided or collaborative, and not entirely autonomous, until the models are reliable enough to take over completely.

I still don’t think we fully appreciate a future in which anyone can develop and operate software. The advent of LLMs, funnily enough, appears to be a full-circle moment for Human-Computer Interfaces. We started with natural language to communicate with human computers, and now we might return to using natural language to interact with computers. As though GUIs were just a blip in time until the technology had caught up. And, perhaps, they were.